GRAID: A Data Protection Solution for NVMe SSDs

Preface

The Rise of NVMe

Existing NVMe Data Protection Solutions

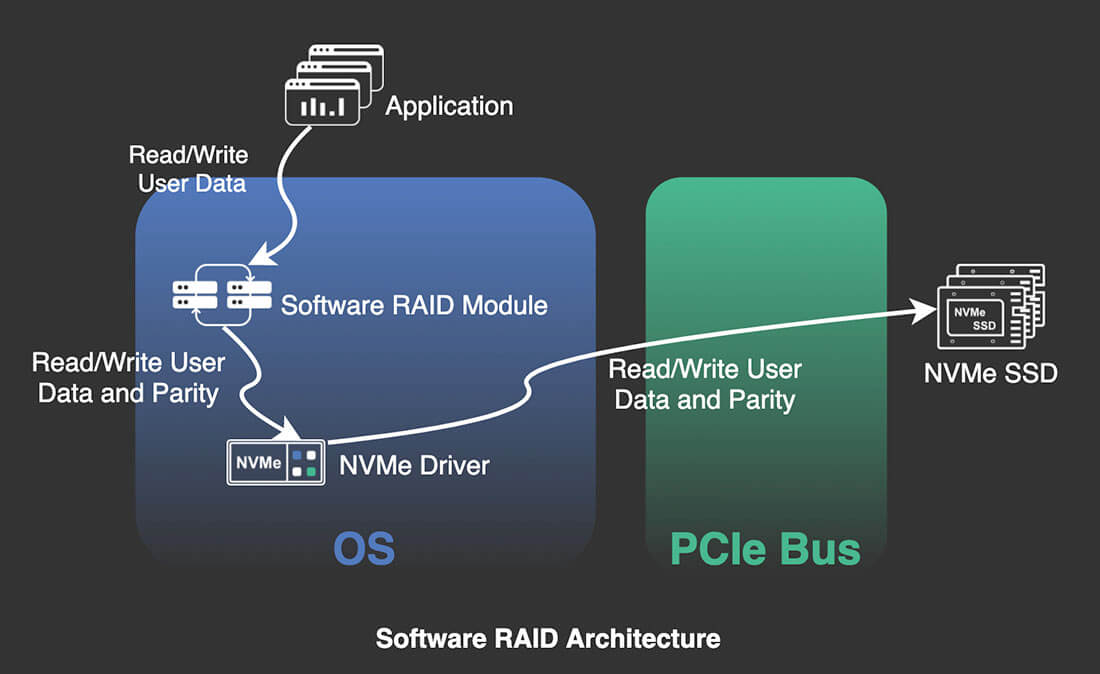

Software RAID

Software RAID Architecture

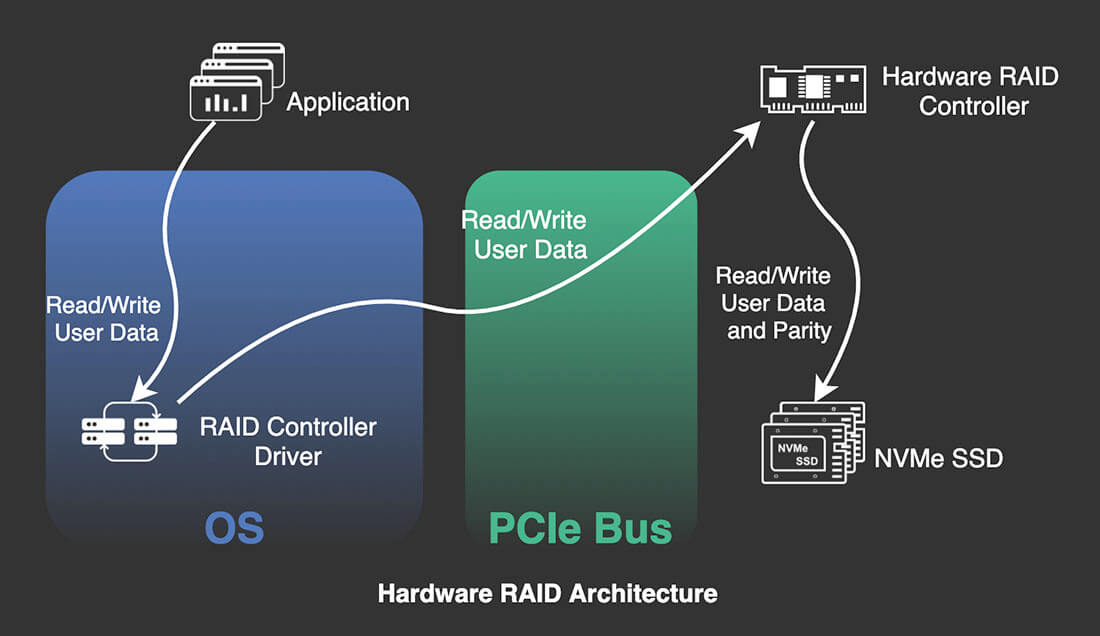

Hardware RAID

Hardware RAID Architecture

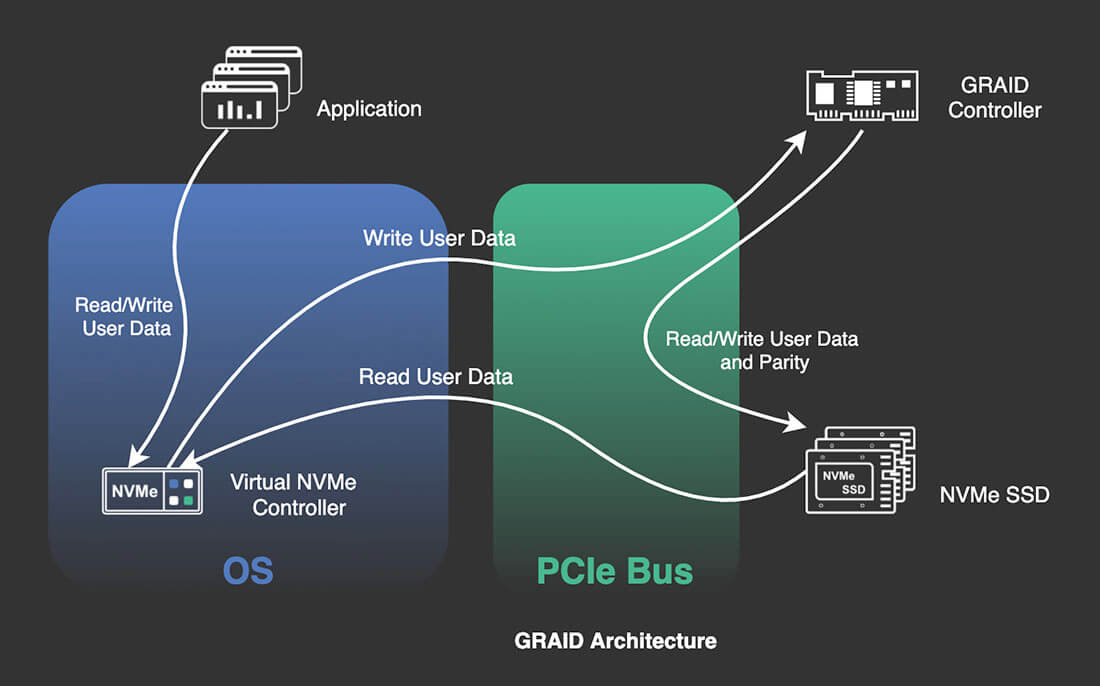

GRAID – The Next Generation of NVMe RAID Technology

GRAID Architecture

Test Case

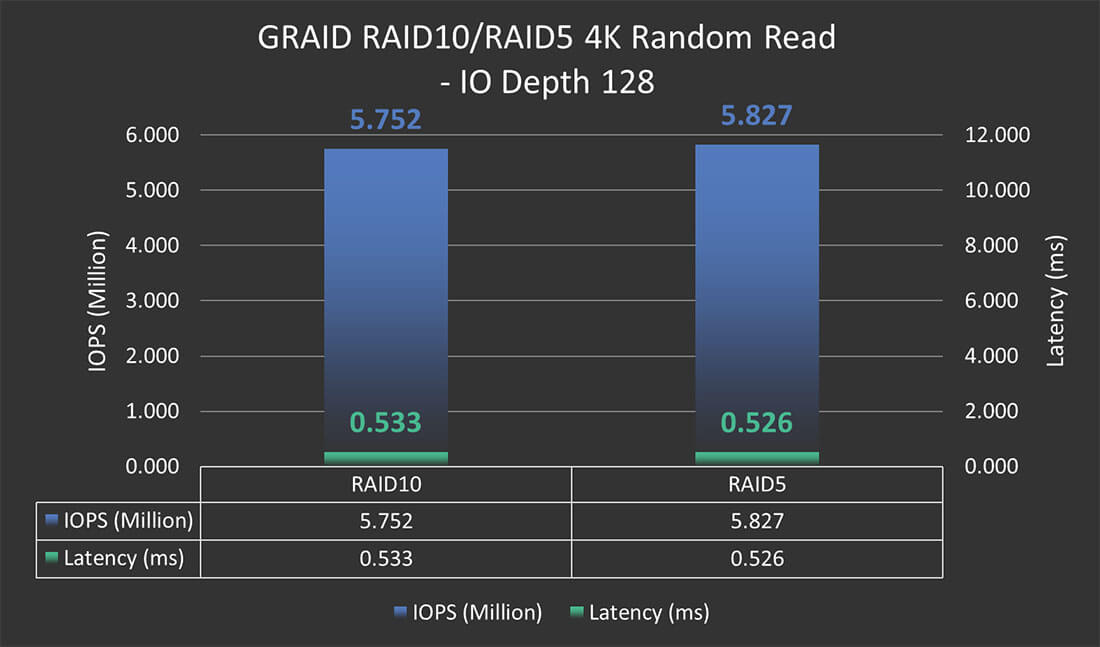

Test Result

Figure 4: GRAID 4K Random Read

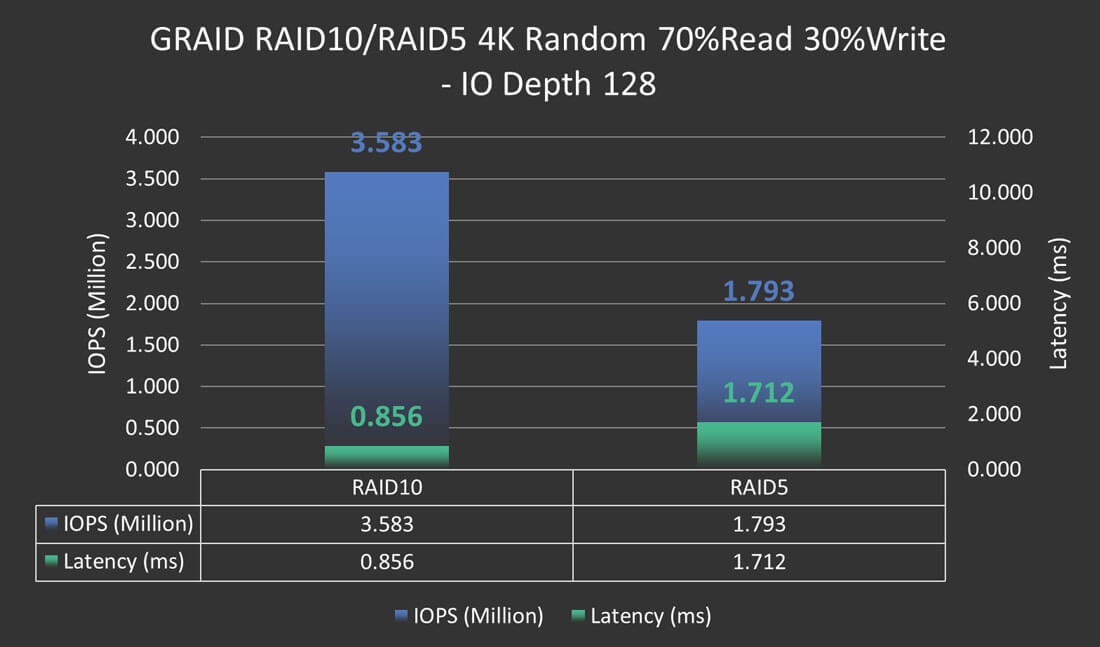

Figure 5: GRAID 4K Random Read/Write

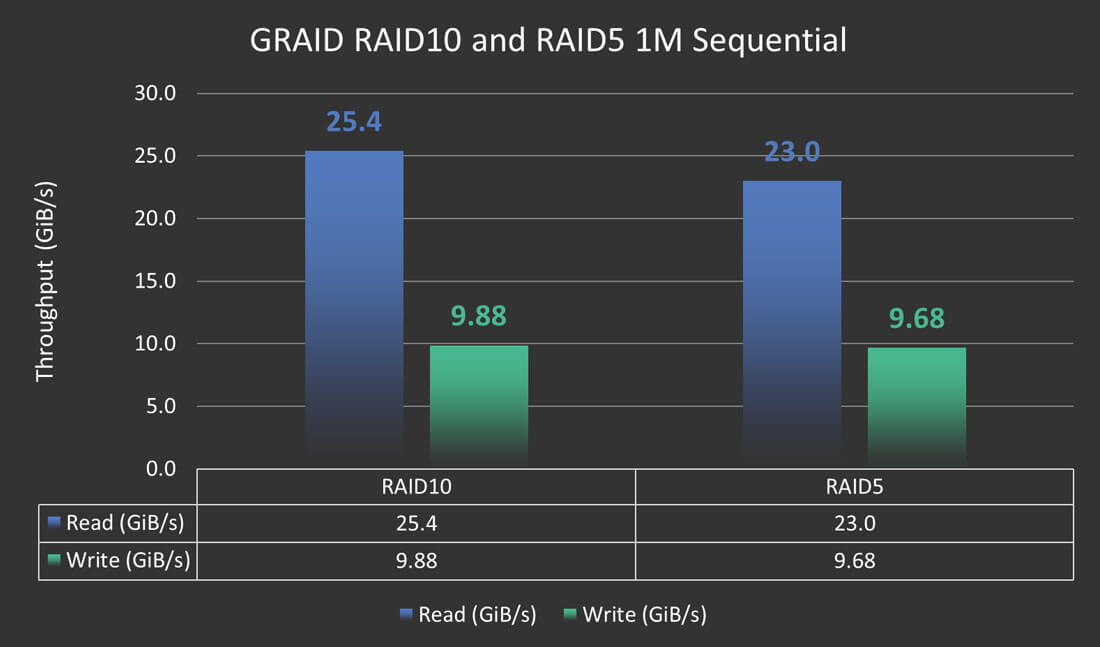

Figure 6: GRAID RAID10/RAID5 1M Sequential

GIGABYTE All-Flash Server

Conclusion

R282-Z92 Rack Server
