
Setting the Record Straight: What is HPC? A Tech Guide by GIGABYTE

The term HPC, which stands for high performance computing, gets thrown around a lot nowadays as server solutions become more and more ubiquitous. It risks becoming a catchall phrase, as if anything labeled “HPC” must be the right choice for your computing needs. You may be wondering: what exactly are the benefits of HPC, and is HPC right for you? GIGABYTE Technology, an industry leader in high-performance servers, presents this tech guide to help you understand what HPC means on both a theoretical and a practical level. In doing so, we hope to help you evaluate whether HPC is right for you, while demonstrating what GIGABYTE has to offer in the field of HPC.

HPC: Supercomputing Made Accessible and Achievable

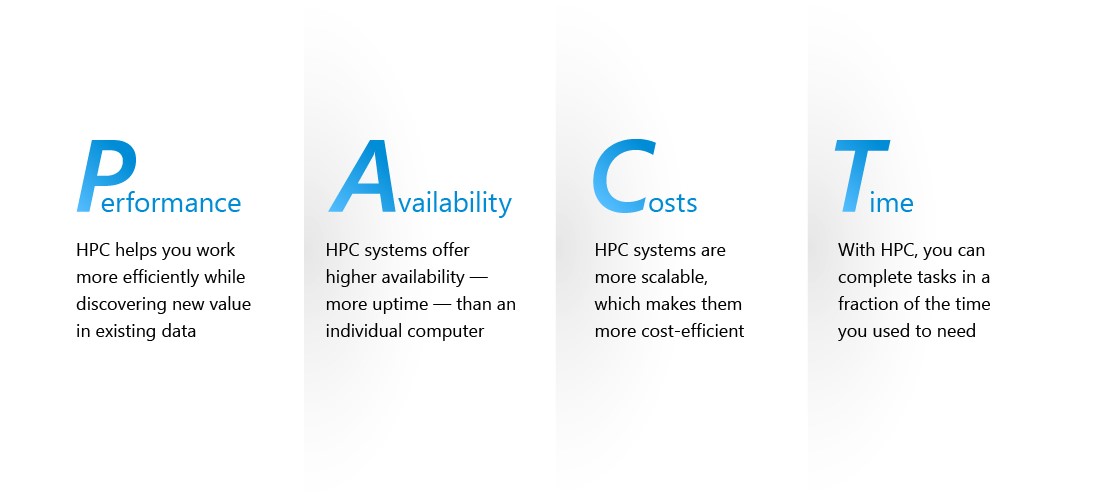

PACT: Remember the Benefits of HPC with this Acronym

Here is a simple acronym to help you remember the four key benefits of HPC: PACT, which stands for performance, availability, costs, and time. They are the reasons why HPC is having a profound impact on the way we live, work, and play.

● Performance

● Availability

● Costs

● Time

HPC Use Cases: How HPC Has Changed Our Lives

● Weather Simulation and Disaster Prevention

● Autonomous Vehicles

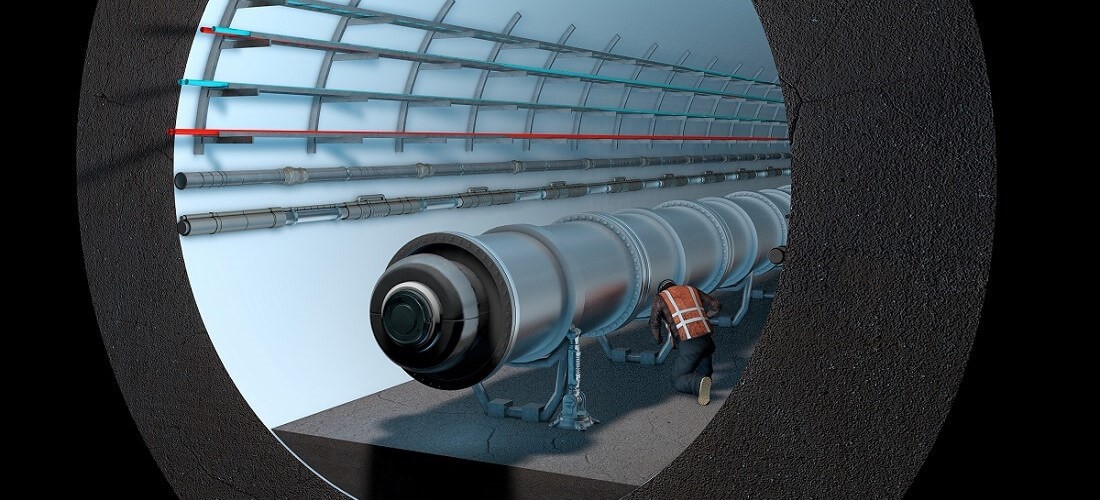

Renowned research institutes are using HPC to achieve astounding breakthroughs. For example, CERN uses GIGABYTE G482-Z51 to process the 40 terabytes of data generated by the Large Hadron Collider every second to search for the beauty quark.

● Energy

● Virtual and Augmented Reality

● Space Exploration, Quantum Physics, and More

Whether it is research in climate change, the COVID-19 virus, space exploration, or any other field of human knowledge, HPC has a role to play. This is why enterprises and universities alike are building their own HPC systems or renting HPC services.

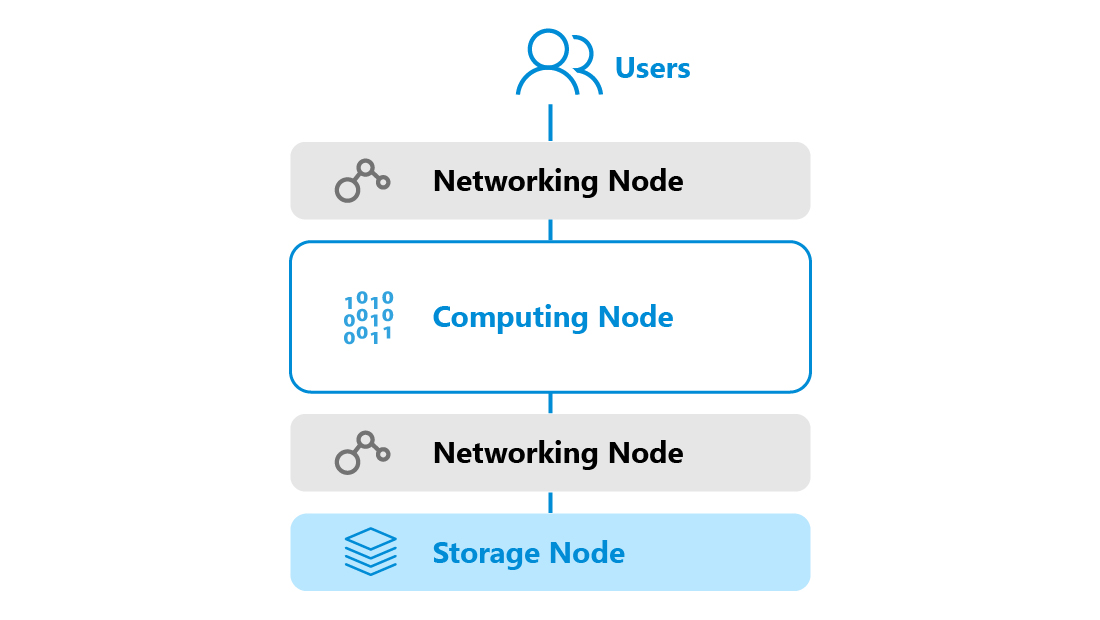

What are the Components of an HPC System?

This simple infographic shows that an HPC system is generally composed of the same three primary layers as a data center: networking, computing, and storage. The networking nodes connect the other nodes to each other and the entire system to the outside world. The computing nodes perform the calculations. The storage nodes store all the data.
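The three layers described above can be sketched as a small data model. This is only an illustrative sketch, not a description of any GIGABYTE product or cluster-management tool: the `Role`, `Node`, `layer_counts`, and `missing_layers` names and the hostnames are all hypothetical, chosen here to show how a cluster inventory might be checked for the networking, computing, and storage layers.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """The three layers named in the text (enum itself is hypothetical)."""
    NETWORKING = "networking"  # connects nodes to each other and the outside world
    COMPUTING = "computing"    # performs the calculations
    STORAGE = "storage"        # stores the data


@dataclass(frozen=True)
class Node:
    name: str   # hypothetical hostname, for illustration only
    role: Role


def layer_counts(nodes):
    """Count how many nodes serve each layer, including layers with zero nodes."""
    counts = Counter(node.role for node in nodes)
    return {role.value: counts.get(role, 0) for role in Role}


def missing_layers(nodes):
    """Return the layers that have no node in the cluster, in Role order."""
    present = {node.role for node in nodes}
    return [role for role in Role if role not in present]


# A made-up four-node cluster covering all three layers.
cluster = [
    Node("switch-01", Role.NETWORKING),
    Node("compute-01", Role.COMPUTING),
    Node("compute-02", Role.COMPUTING),
    Node("storage-01", Role.STORAGE),
]

print(layer_counts(cluster))
print(missing_layers(cluster))
```

In this toy model, an empty list from `missing_layers` means every layer is represented; a real system would of course also need to size each layer for its workload.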

Conclusion: Three Steps to Find Out if HPC is Right for You

Recommended GIGABYTE Server Solutions

GIGABYTE has a full line of server solutions for HPC applications. Worthy of note are H-Series High Density Servers for incredible processing prowess in a compact form factor; G-Series GPU Servers for use with GPGPU accelerators; versatile R-Series Rack Servers; S-Series Storage Servers for safeguarding your data; and W-Series Tower Servers / Workstations for installation outside of server racks.

● H-Series High Density Servers

● G-Series GPU Servers

● R-Series Rack Servers

● S-Series Storage Servers

● W-Series Tower Servers / Workstations


