What is a Server? A Tech Guide by GIGABYTE

In the modern age, we enjoy an incredible amount of computing power, not because of any device that we own, but because of the servers we are connected to. Servers handle our myriad requests, whether to send an email, play a game, or find a restaurant. They are the machines that make our interconnected age of digital information possible. But what, exactly, is a server? In this Tech Guide, GIGABYTE Technology, an industry leader in high-performance servers, explains what a server is, how it works, and what breakthroughs GIGABYTE has made in the field of server solutions.

1. What is a Server?

2. How Does a Server Work?

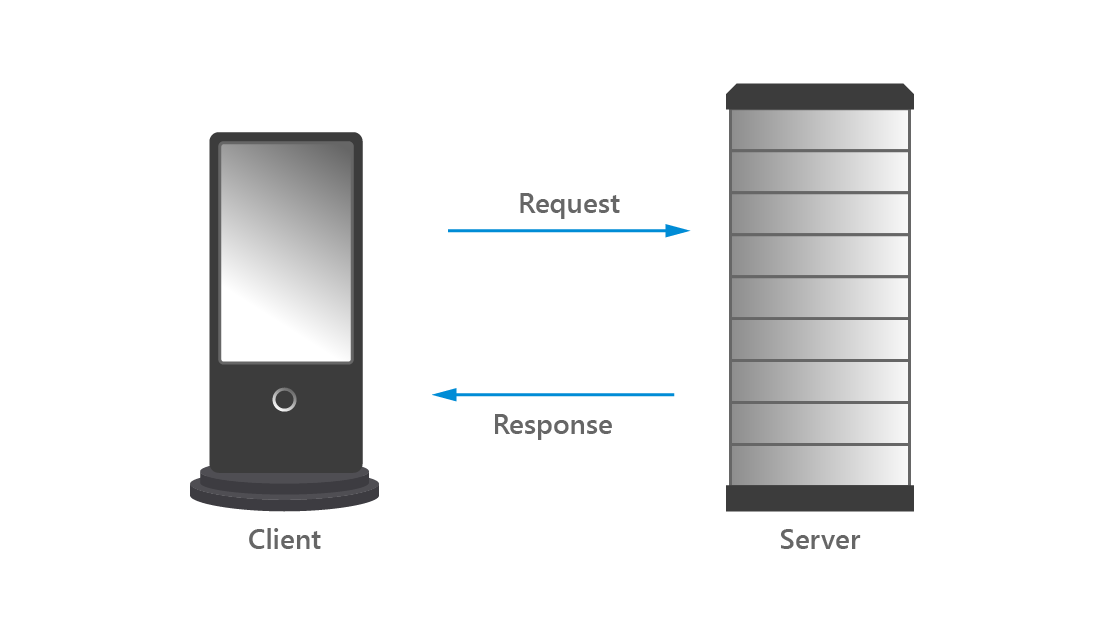

In the standard request-response model, which is the foundation of the modern client-server architecture, a client device initiates communication by making a request on the network. A server picks up the request and provides the appropriate response, thus completing the dialogue.
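The request-response exchange described above can be sketched in a few lines of Python. This is only a minimal illustration running on a single machine, not how a production server is built: the `PING`/`PONG` protocol and the function names `handle_request`, `start_server`, and `send_request` are hypothetical, chosen for this example.

```python
import socket
import threading


def handle_request(request: str) -> str:
    """Server logic: map a request to a response (hypothetical toy protocol)."""
    if request == "PING":
        return "PONG"
    return "UNKNOWN"


def start_server(host: str = "127.0.0.1"):
    """Listen on a free local port and serve exactly one request in the background."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind((host, 0))  # port 0 asks the OS to pick any free port
    listener.listen(1)
    port = listener.getsockname()[1]

    def serve_once():
        # The server waits for a client, reads its request, and replies.
        conn, _addr = listener.accept()
        with conn:
            request = conn.recv(1024).decode("utf-8").strip()
            conn.sendall(handle_request(request).encode("utf-8"))
        listener.close()

    thread = threading.Thread(target=serve_once, daemon=True)
    thread.start()
    return port, thread


def send_request(port: int, message: str, host: str = "127.0.0.1") -> str:
    """Client logic: initiate the connection, send one request, read one response."""
    with socket.create_connection((host, port), timeout=5) as client:
        client.sendall(message.encode("utf-8"))
        return client.recv(1024).decode("utf-8")


if __name__ == "__main__":
    port, server_thread = start_server()
    print(send_request(port, "PING"))
    server_thread.join()
```

Note that the client always speaks first and the server only ever answers; that asymmetry is what defines the two roles, regardless of which machine is more powerful.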

3. What are the Different Types of Servers?

4. How Have Servers Changed Over Time?

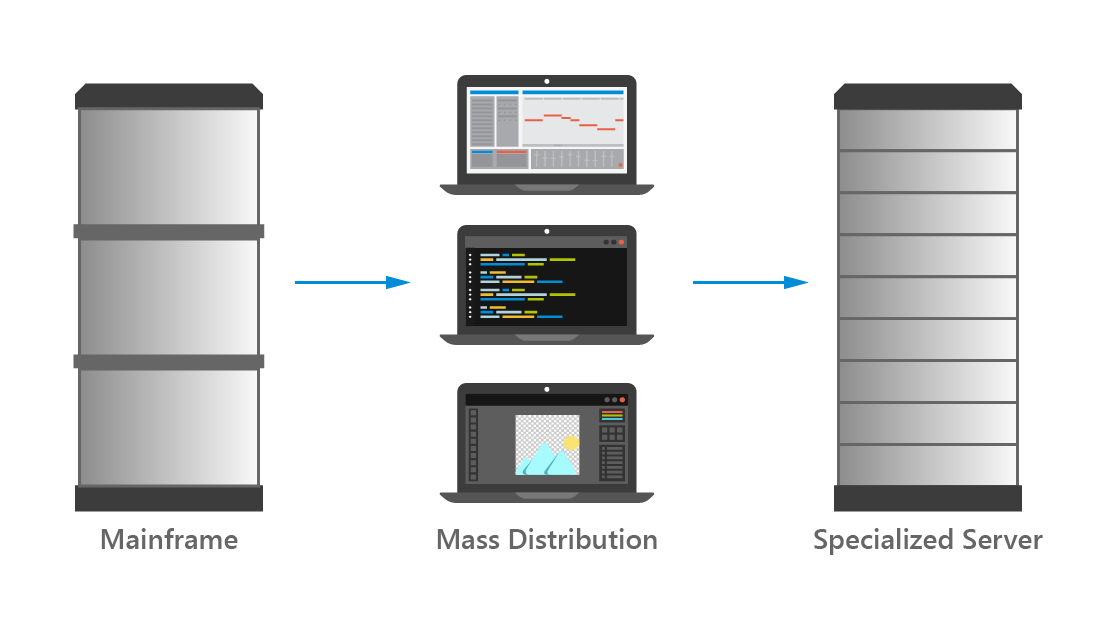

Mainframes once housed all the processing power, until advances in manufacturing made it possible for personal computers to operate independently. Since then, computational resources have become centralized again, this time in the form of specialized servers designed to provide resources or services to client devices connected over a network.

5. GIGABYTE’s Rack-Mounted Server Solutions

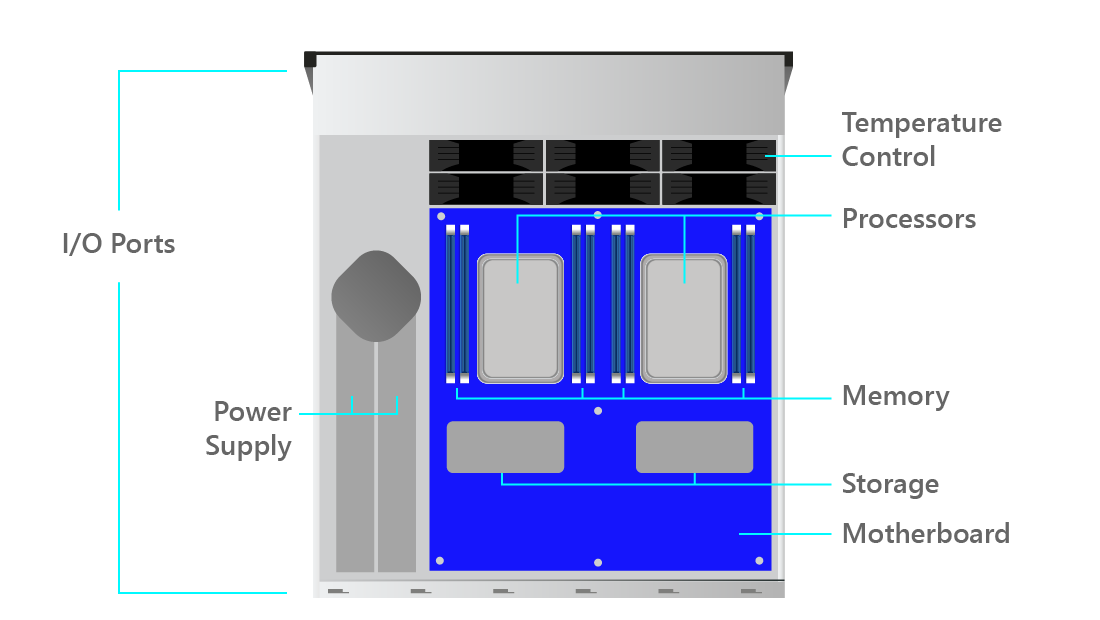

The inside of every server looks different, but in this rough diagram, you can see the seven primary components of a rack-mounted server: motherboard, processors, memory, storage, I/O ports, power supply, and temperature control. GIGABYTE selects components of the highest quality and creates server solutions best suited for clients in different industries.


