10 Frequently Asked Questions about Artificial Intelligence

Artificial intelligence. The world is abuzz with its name, yet how much do you know about this exciting new trend that is reshaping our world and our history? Fret not, friends; GIGABYTE Technology has got you covered. Here is what you need to know about the ins and outs of AI, presented in 10 bite-sized Q&As that are quick to read and easy to digest!

1. What is artificial intelligence (AI)?

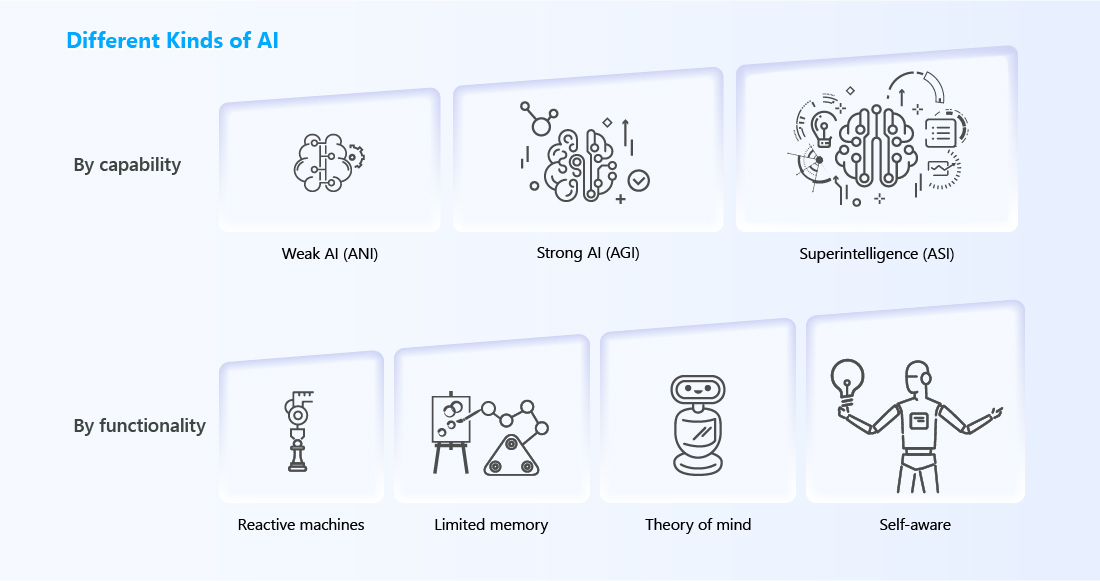

2. What are the different types of AI?

To better understand AI, we can classify it by "capability" and by "functionality". Currently, even the most advanced forms of AI are still a far cry from the kind of AI depicted in science fiction.

3. How does AI work?

4. How can I benefit from AI?

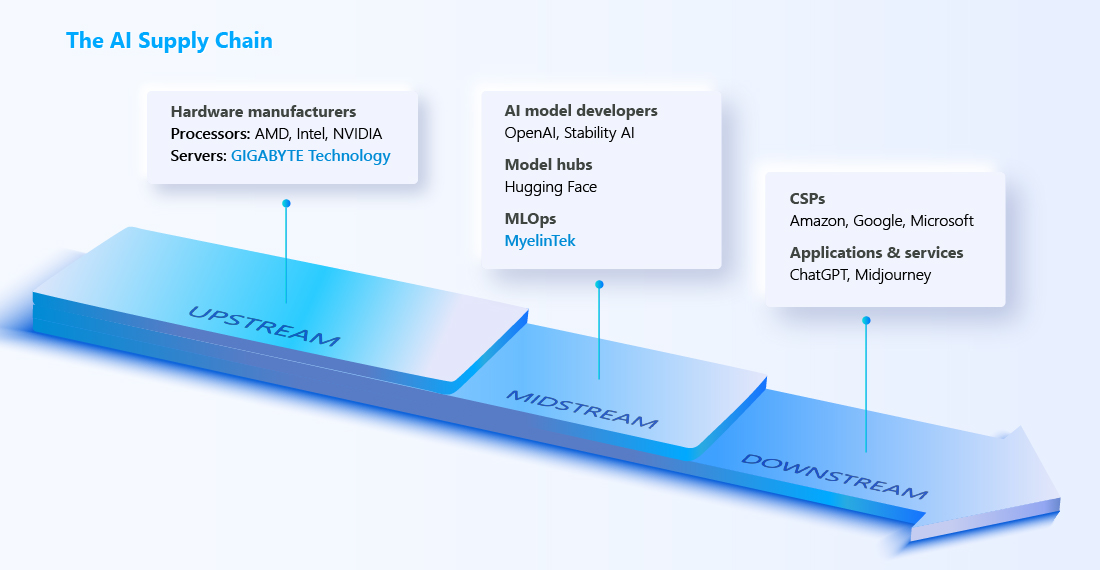

5. What kind of people work with AI?

Here are some examples of the key players in the AI supply chain. GIGABYTE provides not only server solutions but also MLOps services through its investee company, MyelinTek.

6. What kind of computers are used for AI?

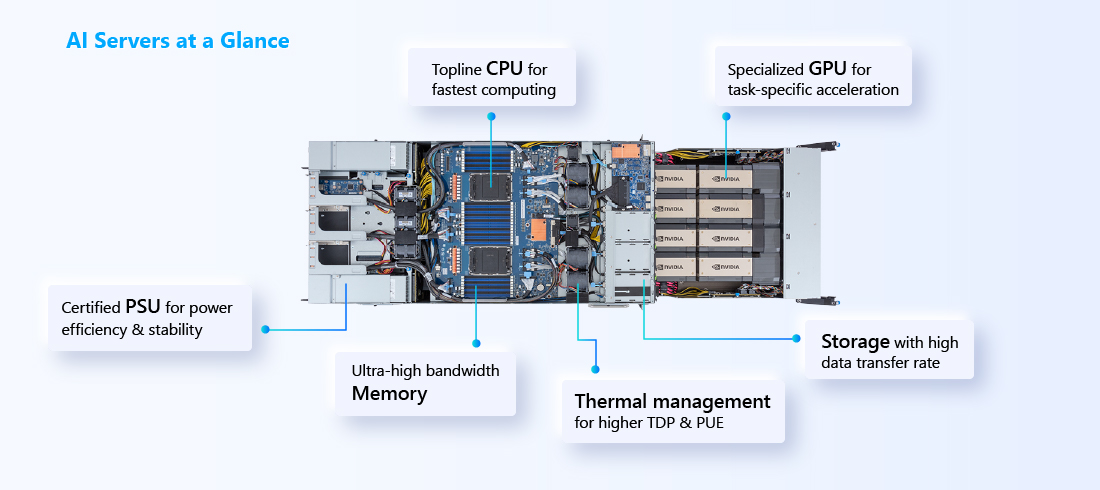

7. What is an AI server?

Simply put, an AI server uses state-of-the-art processors and other components to deliver the high computing performance that AI development and applications demand.

8. What is GIGABYTE Technology’s role in advancing AI?

9. Who are some of the major developers of AI?

10. What is the future of AI?
