
How to Pick the Right Server for AI? Part Two: Memory, Storage, and More

The proliferation of tools and services powered by artificial intelligence has made the procurement of “AI servers” a priority for organizations big and small. In Part Two of GIGABYTE Technology’s Tech Guide on choosing an AI server, we look at six vital components besides the CPU and GPU that can transform your server into a supercomputing powerhouse: memory, storage, power supply units, thermal management, expansion slots, and I/O ports.

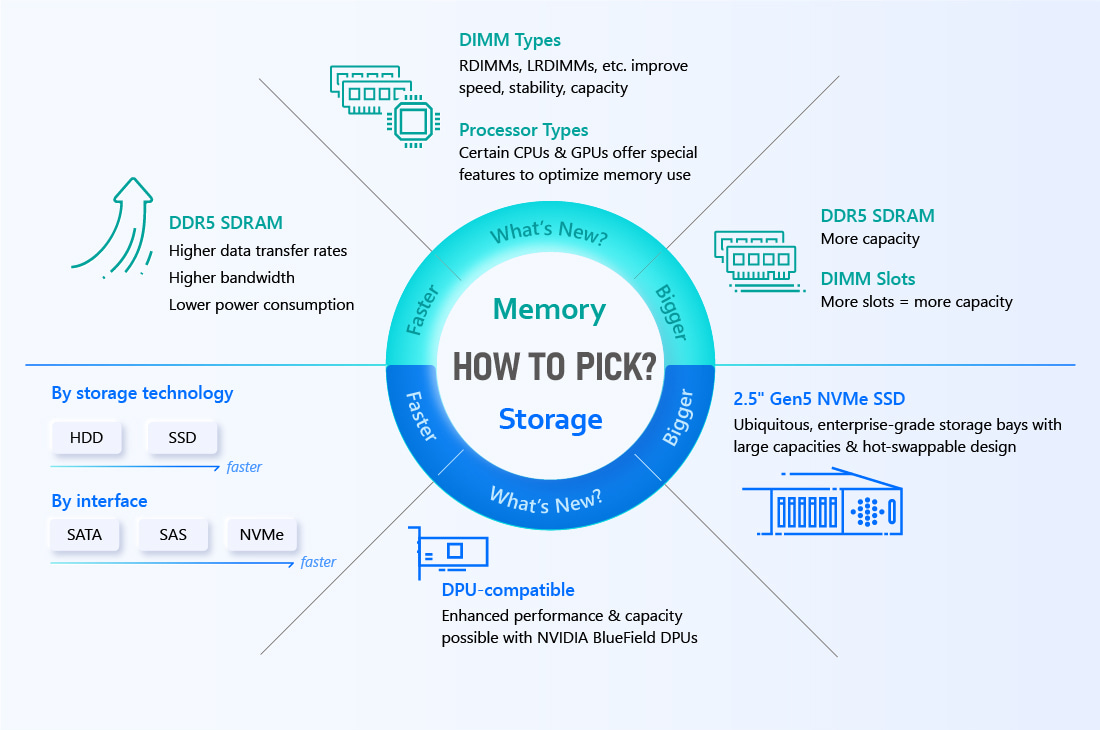

While memory and storage serve different functions, similar rules of thumb apply when choosing the right ones for your AI supercomputing platform.

How to Pick the Right Memory for Your AI Server?

How to Pick the Right Storage for Your AI Server?

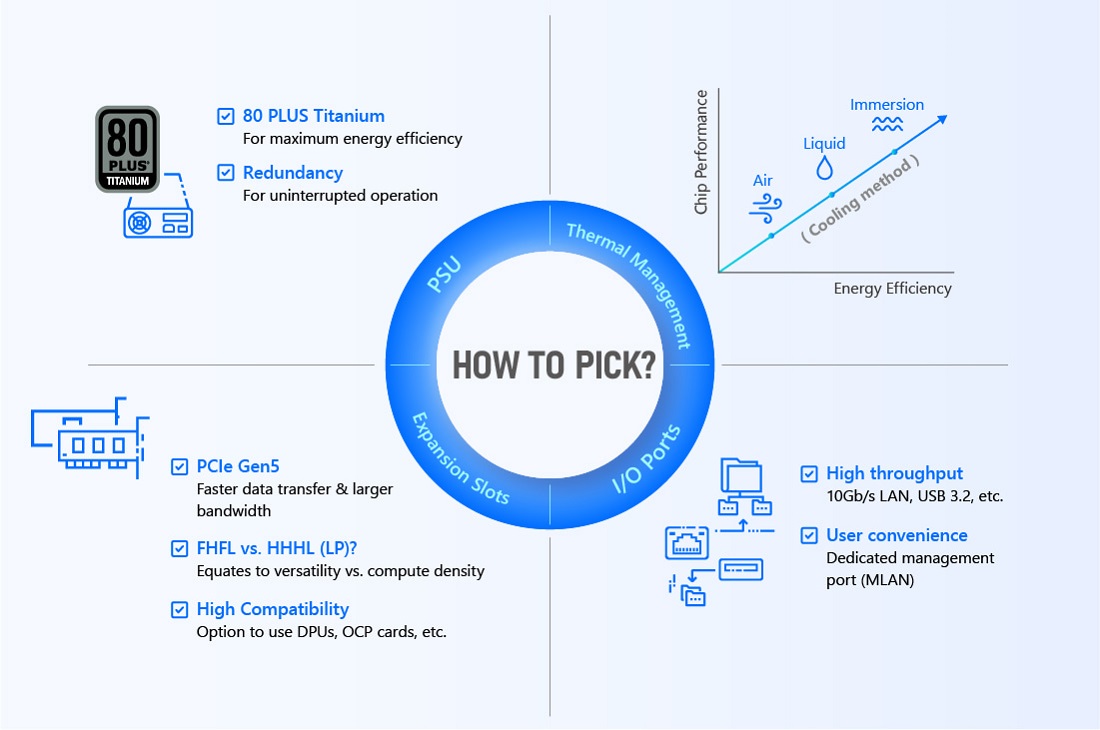

Here are some simple guidelines on how to choose power supply units, thermal management, expansion slots, and I/O ports for your AI server.

How to Pick the Right Power Supply Unit for Your AI Server?

How to Pick the Right Thermal Management for Your AI Server?

How to Pick the Right Expansion Slots for Your AI Server?

How to Pick the Right I/O Ports for Your AI Server?


